Abstract

Many existing Python libraries implement algorithms that ensure fairness in machine learning. For example, Fairlearn (Weerts et al., 2023) and aif360 (Bellamy et al., 2018) provide tools for mitigating bias in single-stage machine learning predictions under statistical association-based fairness criteria such as demographic parity and equal opportunity. However, they do not focus on counterfactual fairness (CF), which defines an individual-level fairness concept from a causal perspective, and in general they cannot easily be extended to the reinforcement learning (RL) setting. Additionally, ml-fairness-gym (D’Amour et al., 2020) allows users to simulate unfairness in sequential decision-making, but it neither implements algorithms that reduce unfairness nor addresses CF. To our knowledge, Wang et al. (2025) is the first work to study CF in RL. Correspondingly, PyCFRL is also the first code library to address CF in the RL setting.

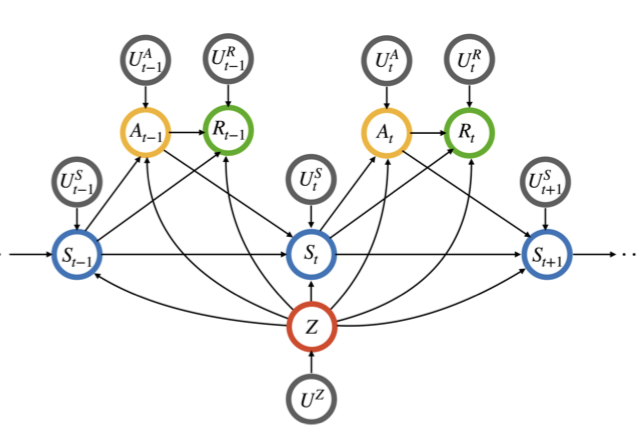

The contribution of PyCFRL is two-fold. First, it implements a data preprocessing algorithm that ensures CF in offline RL. For each individual in the data, the preprocessing algorithm sequentially estimates the counterfactual states under different sensitive attribute values and concatenates all of the individual’s counterfactual states at each time point into a new state vector. Existing RL algorithms can then use the preprocessed data directly for policy learning, and the learned policy should be counterfactually fair up to finite-sample estimation error. Second, it provides a platform for assessing RL policies based on CF. Given any policy and a data trajectory from the environment of interest, users can estimate the value and the CF level that the policy achieves in that environment. The library is publicly available on PyPI and GitHub (link), and detailed tutorials can be found in the PyCFRL documentation (link).
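To make the preprocessing idea concrete, the following is a minimal sketch that is not PyCFRL's API or necessarily the estimator of Wang et al. (2025). It assumes a hypothetical linear additive-noise model, S_0 = f_0(z) + U_0 and S_t = f(z, S_{t-1}, A_{t-1}) + U_t, fitted by least squares, with a sensitive attribute coded as a single numeric column (e.g. binary 0/1) and the observed actions held fixed. For each individual, the sketch recovers the noise U_t from the observed transition, re-applies it under every sensitive attribute value z' in time order, and concatenates the resulting counterfactual states. The names `fit_additive_model` and `counterfactual_states` are hypothetical.

```python
import numpy as np


def _design(z, prev_state=None, prev_action=None):
    """Regressors [1, z] for S_0, or [1, z, S_{t-1}, A_{t-1}] for S_t (hypothetical linear model)."""
    cols = [np.ones_like(z, dtype=float), z.astype(float)]
    if prev_state is not None:
        cols += [prev_state, prev_action.reshape(len(z), -1)]
    return np.column_stack(cols)


def fit_additive_model(z, states, actions):
    """Least-squares fit of S_0 = f0(z) + U_0 and S_t = f(z, S_{t-1}, A_{t-1}) + U_t.

    z: (N,) sensitive attribute; states: (N, T+1, d); actions: (N, T).
    """
    t_plus_1 = states.shape[1]
    init_coef, *_ = np.linalg.lstsq(_design(z), states[:, 0], rcond=None)
    # Pool all transitions t = 1..T into a single regression.
    X = np.vstack([_design(z, states[:, t - 1], actions[:, t - 1]) for t in range(1, t_plus_1)])
    Y = np.vstack([states[:, t] for t in range(1, t_plus_1)])
    trans_coef, *_ = np.linalg.lstsq(X, Y, rcond=None)
    return init_coef, trans_coef


def counterfactual_states(model, z, states, actions, z_levels=(0, 1)):
    """Return an (N, T+1, len(z_levels) * d) array of concatenated counterfactual states."""
    init_coef, trans_coef = model
    n, t_plus_1, _ = states.shape
    # t = 0: recover U_0 under the observed z, then re-apply it under each z'.
    u0 = states[:, 0] - _design(z) @ init_coef
    cf = {}
    for zp in z_levels:
        traj = np.empty_like(states, dtype=float)
        traj[:, 0] = _design(np.full(n, zp, dtype=float)) @ init_coef + u0
        cf[zp] = traj
    # t >= 1: sequentially propagate each counterfactual trajectory.
    for t in range(1, t_plus_1):
        # Noise recovered from the observed transition, shared by all z'.
        u_t = states[:, t] - _design(z, states[:, t - 1], actions[:, t - 1]) @ trans_coef
        for zp in z_levels:
            zp_vec = np.full(n, zp, dtype=float)
            cf[zp][:, t] = _design(zp_vec, cf[zp][:, t - 1], actions[:, t - 1]) @ trans_coef + u_t
    # Concatenate the counterfactual states for all z' into one state vector per time point.
    return np.concatenate([cf[zp] for zp in z_levels], axis=-1)


# Usage on simulated data (hypothetical data-generating process).
rng = np.random.default_rng(0)
n, T, d = 200, 5, 2
z = rng.integers(0, 2, size=n)
actions = rng.integers(0, 2, size=(n, T))
states = np.empty((n, T + 1, d))
states[:, 0] = 0.5 * z[:, None] + rng.normal(size=(n, d))
for t in range(1, T + 1):
    states[:, t] = (0.8 * states[:, t - 1] + 0.3 * z[:, None]
                    + 0.2 * actions[:, t - 1, None] + rng.normal(size=(n, d)))

model = fit_additive_model(z, states, actions)
new_states = counterfactual_states(model, z, states, actions)
print(new_states.shape)  # (200, 6, 4)
```

Under this model, the block of the new state for an individual's own z reproduces the observed state. The concatenated vector is also the same whether the individual was observed with z = 0 or z = 1, which is why a policy that depends only on it is counterfactually fair up to estimation error. Any offline RL algorithm could then be trained on `new_states` in place of the original states.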